Initial Release on HuggingFace

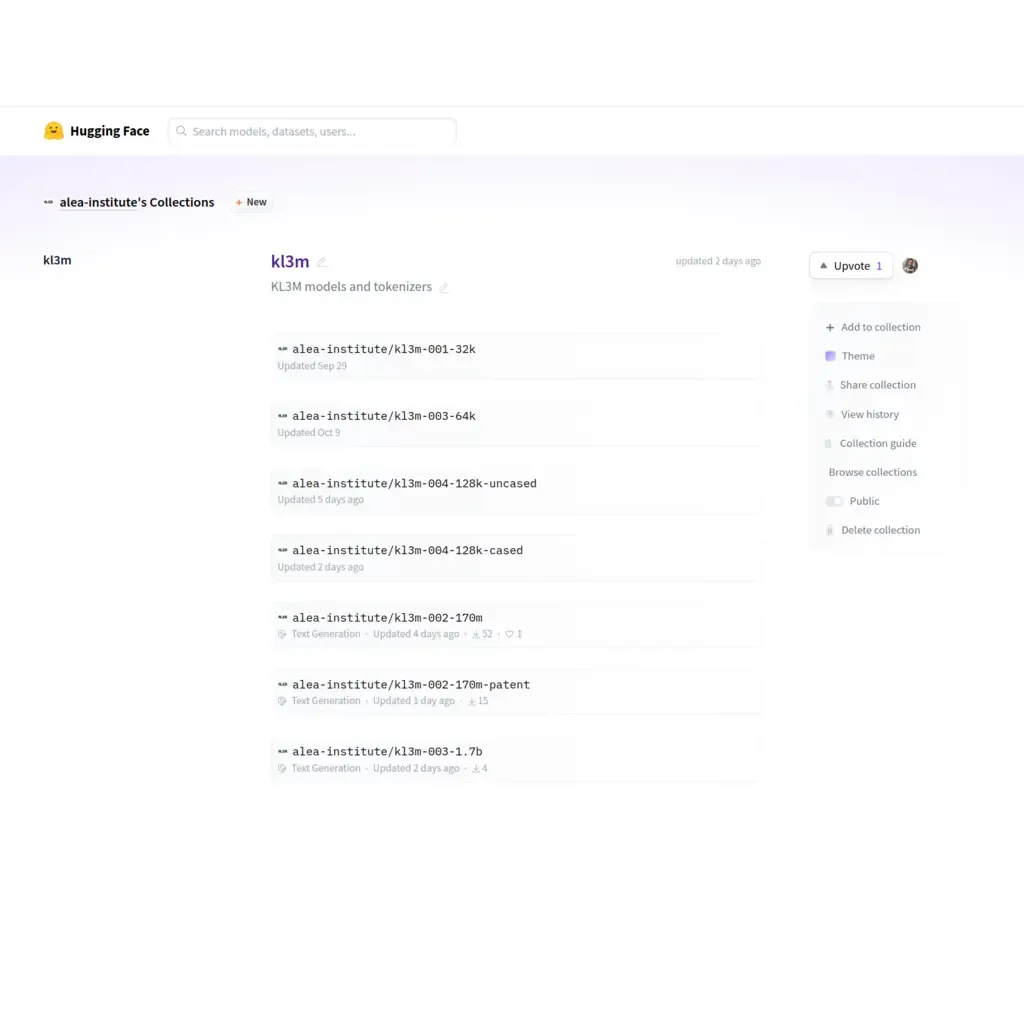

We’re excited to announce that KL3M tokenizers and models are now publicly available on HuggingFace, the leading platform for sharing LLMs.

We’ve begun by opening up our first four tokenizers and our two original generative models, kl3m-002-170m and kl3m-003-1.7b, for public research and use, along with the fine-tuned All the Patents model.

These models, which were the first text models to obtain Fairly Trained L-Certification, were used in our early research and development on domain-specific pretraining efficiency, toxicity, and bias.

We’ll be completing the release of our older KL3M models with the original 3.7B MoE and 7B models later this month. These larger models, while multilingual, are relatively under-trained compared to the smaller models, which saw substantially more training time and data.

Complete replication, from data collection to pretraining, will soon be available as part of the kl3m-004 family. This data is already available on S3 under a Requester Pays model, and we are working with infrastructure providers to make this data available at zero cost to the public.
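Requester Pays buckets bill the request and transfer costs to the caller's AWS account, so each request has to opt in explicitly and must be made with AWS credentials. Here is a minimal sketch using boto3, where the bucket name, object key, and helper names are hypothetical placeholders, since the post does not name them:

```python
def requester_pays_kwargs(bucket: str, key: str) -> dict:
    """Build get_object arguments that accept Requester Pays charges."""
    return {"Bucket": bucket, "Key": key, "RequestPayer": "requester"}


def download_object(bucket: str, key: str, dest_path: str) -> None:
    """Stream one object to disk; the caller's AWS account pays for it."""
    import boto3  # imported lazily so the helper above works without boto3

    s3 = boto3.client("s3")
    response = s3.get_object(**requester_pays_kwargs(bucket, key))
    with open(dest_path, "wb") as fh:
        for chunk in response["Body"].iter_chunks():
            fh.write(chunk)
```

A call would look like `download_object("example-bucket", "path/to/object", "object.bin")`, with the real bucket and key substituted for these placeholders.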

All tokenizer and model resources will be conveniently located under the KL3M Collection here.

The Tokenizers

kl3m-001-32k

- 32K vocab trained on legal and financial text

- Trained on ~500B tokens from primarily English sources

- Notable for domain-specific vocab and alternative whitespace handling related to citation formats

- HuggingFace | GitHub

kl3m-003-64k

- 64K multilingual vocab trained on general, legal, and financial text

- Trained on ~1.5T tokens across English, German, Spanish, French, and other EU languages

- Used for second-generation KL3M models (3.7B, 7B)

- Enhanced citation format support compared with the 001 tokenizer

- HuggingFace | GitHub

kl3m-004-128k-uncased

- 128K multilingual case-insensitive vocab

- Trained on a stratified 4M-document sample across legal, financial, technical, and general text

- Supports English, Spanish, German, French, Italian, and other EU languages

- Currently recommended for embedding and deduplication use cases

- HuggingFace | GitHub

kl3m-004-128k-cased

- 128K multilingual case-sensitive vocab

- Trained on a stratified 4M-document sample across legal, financial, technical, and general text

- Currently recommended for most generative use cases

- HuggingFace | GitHub
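To illustrate the recommendations above, here is a minimal sketch that picks a kl3m-004 tokenizer by use case and loads it with the `tokenizers` library. The Hub organization name `alea-institute` is an assumption (the post does not state the repository path), and the helper names are hypothetical:

```python
# Assumption: the repositories live under this Hub organization.
HUB_ORG = "alea-institute"

# Recommendations taken from the tokenizer descriptions above.
RECOMMENDED = {
    "embedding": "kl3m-004-128k-uncased",
    "deduplication": "kl3m-004-128k-uncased",
    "generation": "kl3m-004-128k-cased",
}


def repo_id(name: str, org: str = HUB_ORG) -> str:
    """Join the (assumed) organization and a tokenizer name."""
    return f"{org}/{name}"


def load_tokenizer(use_case: str):
    """Download and return the tokenizer recommended for a use case."""
    from tokenizers import Tokenizer  # lazy import; needs network access

    return Tokenizer.from_pretrained(repo_id(RECOMMENDED[use_case]))
```

For example, `load_tokenizer("generation").encode("See 17 C.F.R. § 240.10b-5.").tokens` would return the token strings for a citation-heavy sentence, which is a quick way to inspect how citation formats are split.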

The Models

kl3m-002-170m

- A 170 million parameter small language model (SLM)

- 4,096 token context window

- Trained on clean, legally permissible data

- Optimized for legal, regulatory and financial workflows

- Runs in real time in fp32 on a MacBook Air M1

- HuggingFace | GitHub
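As a rough sketch of how this model might be used, the following runs a text-generation pipeline with `transformers` while keeping the prompt plus completion inside the 4,096-token context window. The repository ID is an assumption (the post does not state it), and the function names are hypothetical:

```python
MODEL_ID = "alea-institute/kl3m-002-170m"  # assumed Hub repository ID
CONTEXT_WINDOW = 4096  # from the model description above


def new_token_budget(prompt_tokens: int, requested: int,
                     context_window: int = CONTEXT_WINDOW) -> int:
    """Cap the requested completion length to what the window allows."""
    remaining = context_window - prompt_tokens
    if remaining <= 0:
        raise ValueError("prompt already fills the context window")
    return min(requested, remaining)


def complete(prompt: str, requested: int = 64) -> str:
    """Generate a continuation of `prompt` (downloads the model on first use)."""
    from transformers import pipeline  # lazy import; needs network access

    pipe = pipeline("text-generation", model=MODEL_ID)
    n_prompt = len(pipe.tokenizer(prompt)["input_ids"])
    outputs = pipe(prompt, max_new_tokens=new_token_budget(n_prompt, requested))
    return outputs[0]["generated_text"]
```

Calling `complete("This Agreement shall be governed by")` would return the prompt followed by up to 64 generated tokens.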

kl3m-003-1.7b

- A 1.7 billion parameter model

- 8,192 token context window

- Built on the same legally permissible dataset

- Enterprise focus for legal/regulatory use cases

- Runs in real time in bf16 on consumer GPUs

- HuggingFace | GitHub
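The hardware claims for the two models follow from simple arithmetic: weight memory is roughly the parameter count times the bytes per parameter (4 for fp32, 2 for bf16). The sketch below uses the nominal parameter counts from the model names, so it is an estimate only, and it covers weights alone, not activations or the attention cache:

```python
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2}

# Nominal figures taken from the model descriptions above.
MODELS = {
    "kl3m-002-170m": {"params": 170_000_000, "context": 4096, "dtype": "fp32"},
    "kl3m-003-1.7b": {"params": 1_700_000_000, "context": 8192, "dtype": "bf16"},
}


def weight_gb(name: str) -> float:
    """Approximate weight memory in gigabytes (10^9 bytes)."""
    spec = MODELS[name]
    return spec["params"] * BYTES_PER_PARAM[spec["dtype"]] / 1e9


def fits_context(name: str, n_tokens: int) -> bool:
    """True if a sequence of n_tokens fits in the model's context window."""
    return n_tokens <= MODELS[name]["context"]
```

Under these assumptions, kl3m-002-170m needs about 0.68 GB for weights in fp32 and kl3m-003-1.7b about 3.4 GB in bf16, which is consistent with the laptop and consumer-GPU targets listed above.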

kl3m-002-170m-patent