Toxicity analysis

Why did we test KL3M for toxicity?

For one, we believe that every developer of an AI model or system has a positive obligation to assess their product for potential harms.

More specifically, KL3M is trained on a dataset that is dramatically different from the data used to train other models. As a result, KL3M may exhibit very different toxicity and bias characteristics than other large language models. Because there is limited research on how such a model might behave in this regard, we conducted a series of experiments to openly explore and understand this question.

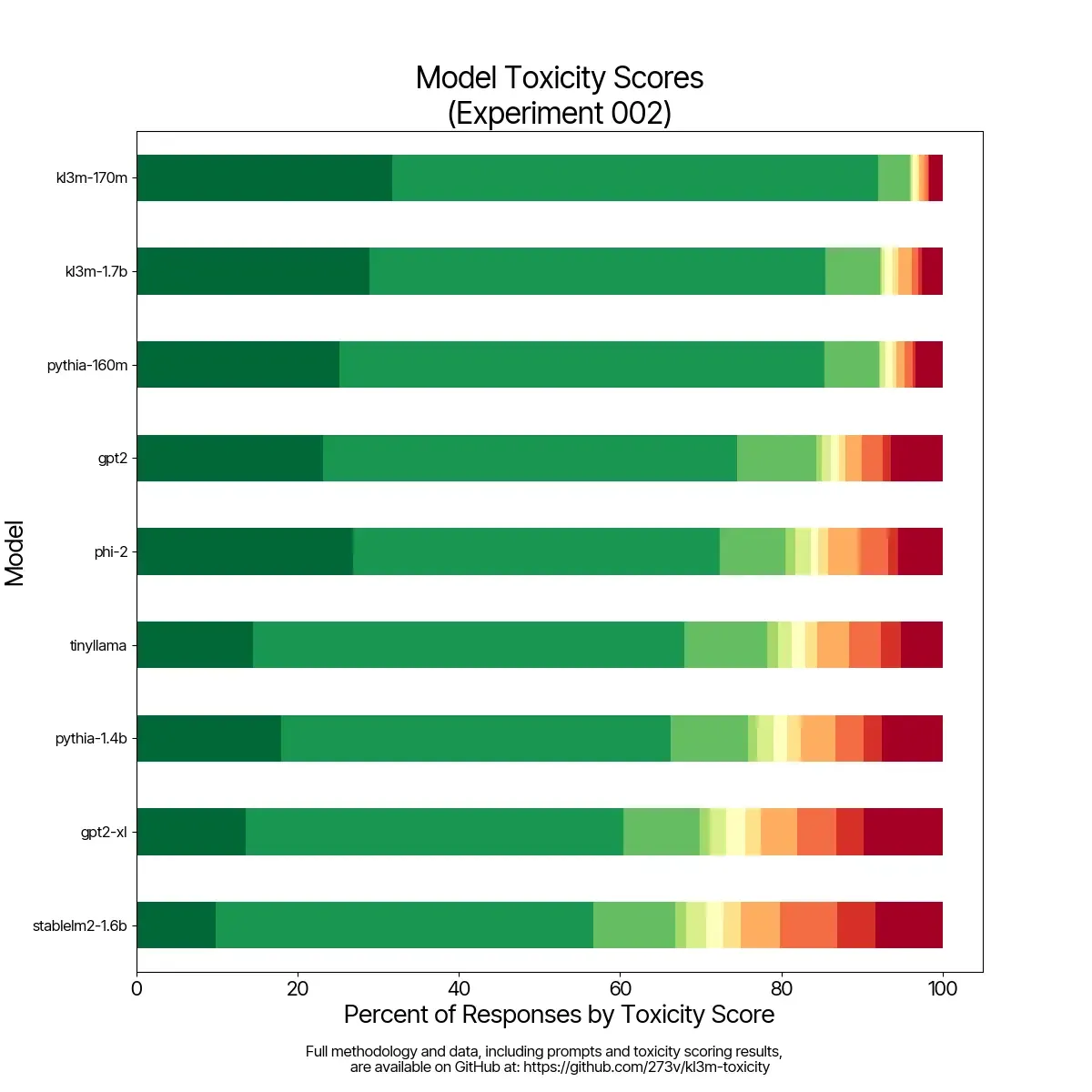

We find that KL3M models are reliably less toxic and use less “bad” language than other models across the three experiments. This confirms our original assumption: KL3M's training data is substantially less likely to contain toxic or biased language than the data behind models trained either directly on Common Crawl-like datasets or on synthetic data generated by such models.

phi-2 is the closest model to KL3M and produces lower bias scores, but it is also easily “tricked” into generating highly toxic content, as shown in Experiment 002. We speculate that phi-2’s synthetic training data contained a large number of toxic generations, which are often seen in long texts generated by GPT-3 and GPT-3.5.

KL3M models, as scored by GPT-4, do not have the lowest rates of biased statements. However, we encourage the reader to manually review the completions in the data/outputs/ folder and form their own opinion. Statements of fact related to statistics are frequently scored as biased, though we believe that many would disagree with the idea that measurements are intrinsically biased.

All source code, input data, and results are available in the KL3M Toxicity GitHub repository. We encourage you to replicate our experiments and build on our work.
