The first LLM to receive the Fairly Trained certification.

In collaboration with:

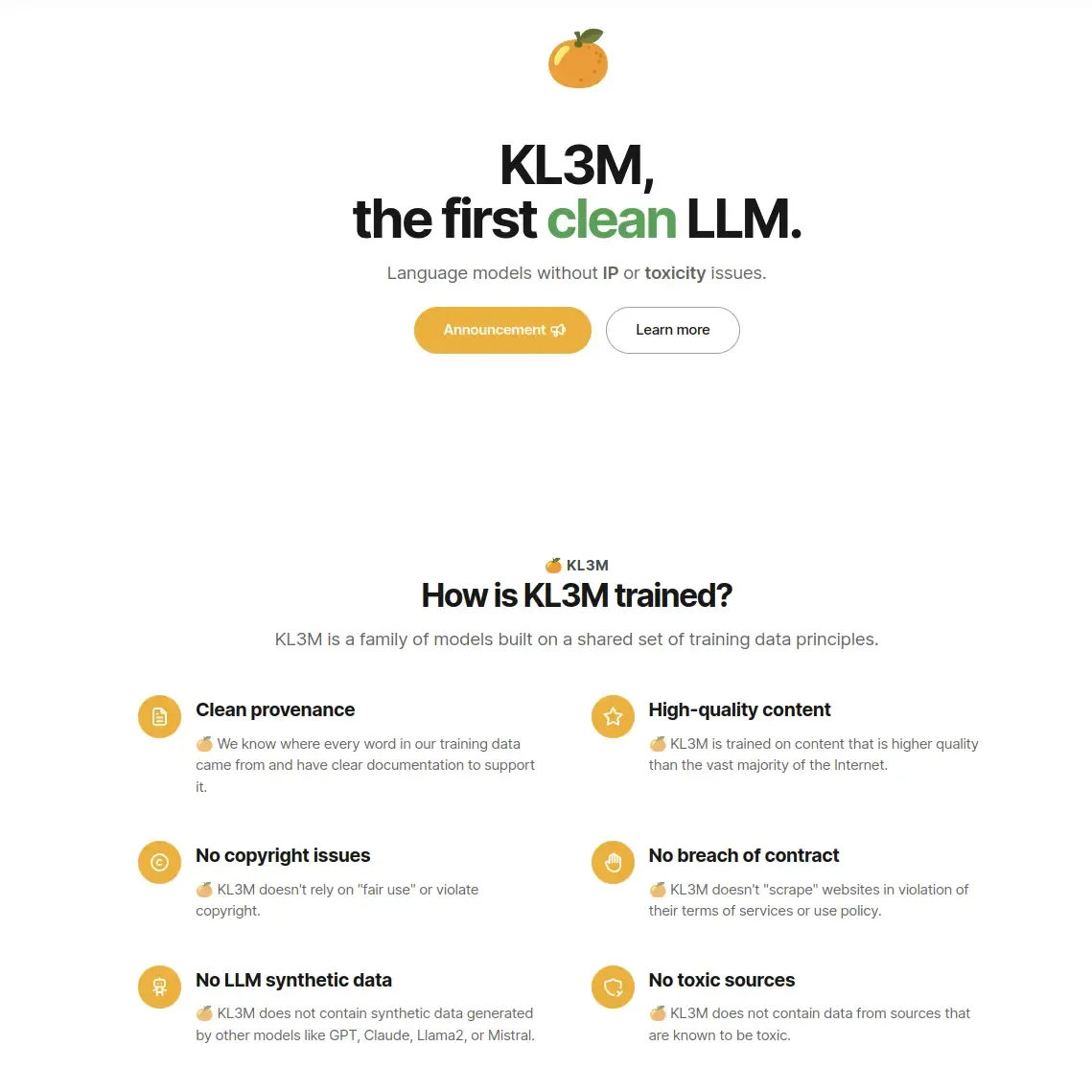

The ALEA Institute is proud to steward KL3M, the first Fairly Trained large language model family, for public benefit.

Originally developed by our team at 273 Ventures LLC in Q4 2023 and Q1 2024, KL3M was donated to ALEA in August 2024.

The ALEA Institute is committed to maintaining and further developing KL3M, with the goal of promoting its adoption as the gold standard for legal and ethical AI model development.

Under its new stewardship, KL3M is an open-data, open-source, open-weights model, and we invite you to learn from it or build on it yourself.

KL3M is notable for several key reasons:

More information is available at the KL3M homepage.

Don't be shy. We'd love to hear from you.